California lawmakers on Tuesday moved one step closer to placing guardrails around artificial intelligence.

The Senate passed a bill that aims to make chatbots used for companionship safer after parents raised mental health concerns.

The legislation, which now heads to the California State Assembly, shows how state lawmakers are tackling safety concerns around AI as tech companies release more AI-powered tools.

“The world looked again at California to lead,” said Sen. Steve Padilla (D-Chula Vista), one of the lawmakers who introduced the bill, on the Senate floor.

At the same time, lawmakers are trying to balance concerns that they could hinder innovation. Groups opposed to the bill, such as the Electronic Frontier Foundation, say the legislation is too broad and would run into free speech issues, according to an analysis of the bill.

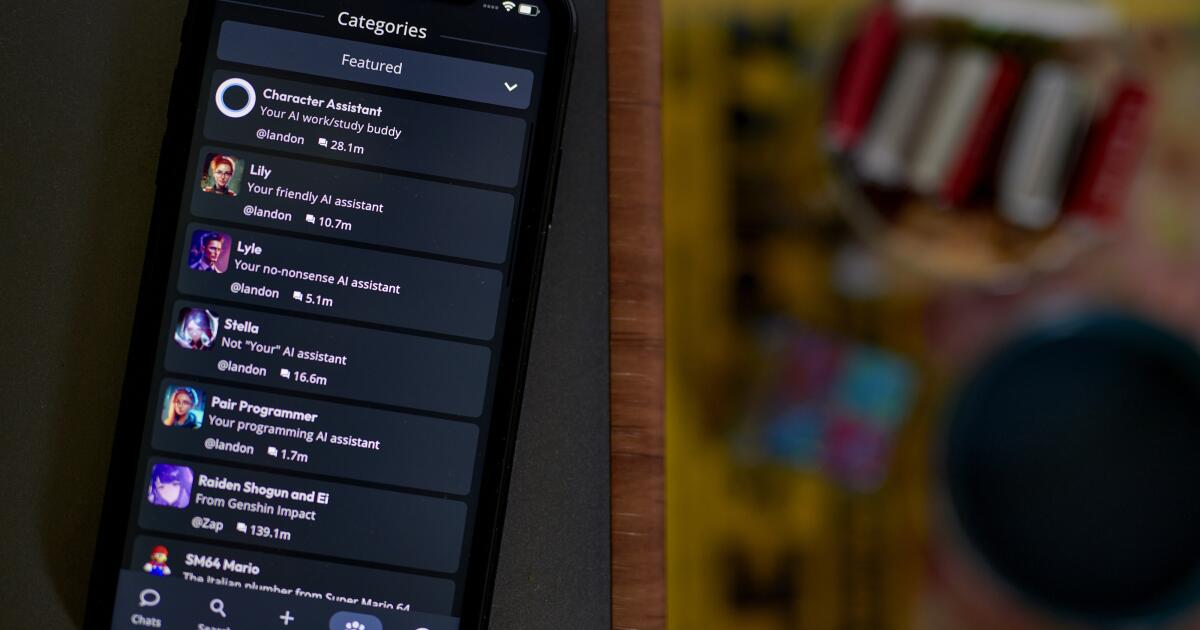

Under Senate Bill 243, operators of companion chatbot platforms would remind users at least every three hours that the virtual characters are not human. They would also disclose that companion chatbots might not be suitable for some minors.

Platforms would also need to take other steps, such as implementing a protocol for addressing suicidal ideation, suicide or self-harm expressed by users. That includes showing users suicide prevention resources.

Suicide prevention and crisis counseling resources

If you or someone you know is struggling with suicidal thoughts, seek help from a professional and call 9-8-8. The United States’ first nationwide three-digit mental health crisis hotline, 988, will connect callers with trained mental health counselors. Text “HOME” to 741741 in the U.S. and Canada to reach the Crisis Text Line.

The operators of these platforms would also report the number of times a companion chatbot brought up suicidal ideation or actions with a user, along with other requirements.

Dr. Akilah Weber Pierson is one of the bill's co-authors. Chatbots, the senator said, are built to hold people's attention, including that of children.

“When a child begins to choose AI connection over real human relationships, that is concerning,” said Sen. Weber Pierson (D-La Mesa).

The bill defines companion chatbots as AI systems capable of meeting the social needs of users. It does not include chatbots that businesses use for customer service.

The legislation drew support from parents who lost their children after they started using chatbots. One of those parents is Megan Garcia, a Florida mother who sued Character.AI.

In the lawsuit, she alleges that the platform's chatbots harmed her son's mental health and failed to notify her or offer help when he expressed suicidal thoughts to these virtual characters.

Character.AI, based in Menlo Park, Calif., has said it takes teen safety seriously and has released a feature that gives parents more information about the time their children spend with chatbots on the platform.

Character.AI asked a federal court to dismiss the case, but a federal judge in May allowed it to proceed.